Israel Streaming Project

| Status | Final |
|---|---|
| Author | Hendrik Vermeylen (KASRL) |
| Date | 05.07.2019 |
| Version | 1.0 |

PROJECT OVERVIEW

Project Summary

A video measurement project covering both digital content and video ads.

Participating Broadcasters

Current Project Status

Tender.

Project Teams and Resources

- Kantar Online Data & Development Unit in Germany

Who Do I Contact and When?

- KA_DE_support@kantarmedia.com (Kantar Tagging Support, KASRL)

More about us

Kantar Online Data and Development Unit is a technology-oriented company located in Saarlouis, Germany. It was founded as a spin-off of the renowned German Research Center for Artificial Intelligence (DFKI), Saarbrücken.

Realizing as early as 1995 that the ever-growing global importance of web-based information dissemination and product marketing would require more and more sophisticated solutions for measuring online audiences, spring began to develop an integrated technology for measuring usage data, estimation algorithms, projection methodologies and reporting tools, which soon began to draw the attention of innovative corporations and national Joint Industry Committees.

Developed in partnership with JICs, spring’s technology for collecting and measuring usage data is now tried and tested. It has helped to define the standard for audience reach measurement of advertising media in the UK, Norway, Finland, the Baltic States, Germany, Switzerland, Romania and many other countries, as well as for a number of broadcasters, publishers and telecommunication companies.

In 2011, the company became a wholly-owned subsidiary of Kantar, a global industry leader in audience measurement for TV, radio and the web.

Today, we are one of the leading pan-European companies in site-centric and user-centric Internet measurement, online research and analysis.

LIBRARY CODE BASE AND ROADMAP

Library Downloads

WHICH LIBRARY DO I NEED?

You can find our currently available libraries below. We will work with you to identify which library you should use as part of your initial implementation planning discussions.

STREAMING LIBRARIES AND JAVASCRIPT DOWNLOADS

| Type: Desktop Player | Notes | Release Date | Download link |
|---|---|---|---|
| Streaming JavaScript | For the web environment or other JavaScript-capable environments (not natively supported) | | kantarmedia-streaming-js-3.0.zip |

| Type: Mobile Player | Notes | Release Date | Download link |
|---|---|---|---|
| Library for iOS | | | kantarmedia-streaming-iOS-1.14.zip |
| Library for Android | | | kantarmedia-streaming-android-2.0.0.zip |

| Type: Big Screen Player | Notes | Release Date | Download link |
|---|---|---|---|
| Library for tvOS | | | kantarmedia-streaming-tvOS-1.14.zip |

| Type: Game Console Player | Notes | Release Date | Download link |
|---|---|---|---|
| Library for Xbox | Supports Xbox One | | |

| Type: Settop Box | Notes | Release Date | Download link |
|---|---|---|---|
| Library for Roku | | | kantarmedia-streaming-roku-barb-1.4.2.zip |

Help with Adapters

WHY DO I NEED AN ADAPTER?

When a library specifically suited to your player is not available, you can still use our measurement by implementing an "adapter". This is a small piece of code that connects the Kantar library to your player. You typically write it yourself, and Kantar can provide consultancy to help you with this.

Documentation about how to integrate the libraries by using an adapter is available in the general documentation Implementation of Stream Measurement.
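As a rough illustration of what an adapter does, here is a minimal Java sketch. The `PlayerAdapter` interface, the `CustomPlayer` class and all method names are hypothetical placeholders and not the Kantar library's actual API; the real adapter contract is described in Implementation of Stream Measurement. The sketch only shows the idea of translating a player's native state into a position and a duration in seconds.

```java
/** Hypothetical adapter contract: the two values a measurement library needs from any player. */
interface PlayerAdapter {
    int getPositionSeconds();
    int getDurationSeconds();
}

/** Hypothetical stand-in for a proprietary player that reports its state in milliseconds. */
class CustomPlayer {
    private long playheadMillis;
    private final long lengthMillis;

    CustomPlayer(long lengthMillis) {
        this.lengthMillis = lengthMillis;
    }

    void seekToMillis(long millis) {
        this.playheadMillis = millis;
    }

    long playheadMillis() {
        return playheadMillis;
    }

    long lengthMillis() {
        return lengthMillis;
    }
}

/** The adapter itself: converts the player's native units into whole seconds. */
class CustomPlayerAdapter implements PlayerAdapter {
    private final CustomPlayer player;

    CustomPlayerAdapter(CustomPlayer player) {
        this.player = player;
    }

    @Override
    public int getPositionSeconds() {
        return (int) (player.playheadMillis() / 1000);
    }

    @Override
    public int getDurationSeconds() {
        return (int) (player.lengthMillis() / 1000);
    }
}
```

The adapter holds no state of its own; it reads from the player each time it is asked, so the values always reflect the player's current state.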

Some examples that require an adapter are listed below.

MICROSOFT SILVERLIGHT

BRIGHTCOVE

For Flash: Brightcove Streaming Sensor 1.2.0 (English Version)

Not yet available natively for Android or iOS. Measurement is currently possible with the available Android and iOS Spring libraries plus a custom adapter that connects the library to the Brightcove API.

YOUVIEW

YouView runs a proprietary version of Flash and they do not use "flash.net.NetStream" but "MediaRouter" instead. An adapter is needed in this case to extend flash.net.NetStream and grab information from MediaRouter such as the position and duration. It is similar to what is demonstrated in NetStream adapter for the flash.media.Sound object example in the documentation.

The library does not rely on flash.external.ExternalInterface being available or cookies. The ExternalInterface is normally used for passing an "unload" call from the browser back to the library in case the browser is being closed. However, this "unload()" method can also be triggered from inside the Flash application itself.

UNDERSTANDING HOW TO IMPLEMENT THE SPRING LIBRARIES

Our Measurement Ethos

The Kantar technology was conceived and developed to be as platform-agnostic as possible.

Every video player can report at least a playhead position and the duration of the video (this is what lets the user see how long the video is and keep track of progress). These two variables form the basis of our measurement system, and they are common to every environment.

We capture a combination of environment-specific identifiers and additional metadata to attribute each streaming heartbeat to a session and a device.

Documentation

Our comprehensive Implementation of Stream Measurement document describes in a general way how the Kantar measurement and the Kantar libraries work. It includes sample implementations and guidelines for writing a custom adapter for our libraries.

This is one of the most important documents. Reading and understanding it is crucial to taking full ownership of the implementation process.

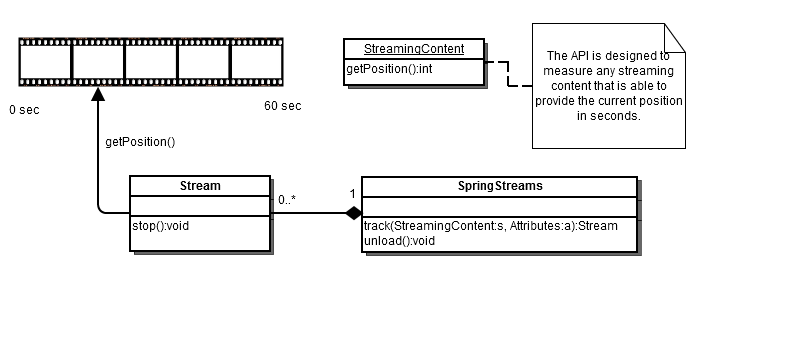

To measure any streaming content, a sensor is needed on the client side to record which sequences of the stream were played by the user. These sequences can be defined by time intervals in seconds. The player or the playing instance must therefore provide a method that delivers the current position in the stream in seconds.

Regularly reading the current position allows all actions on a stream to be tracked. Winding (seek) operations can be identified by unexpected jumps in the position that is read out. Stop or pause operations are identified by the fact that the current position does not change.

User actions and operations like stop or pause are not measured directly (not player event based) but are instead derived from measuring the current position on stream.
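The following Java sketch illustrates how actions could be derived from position samples alone. It is not the library's actual algorithm: the class name, the polling interval and the tolerance parameter are assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative only: derives user actions from successive playhead samples. */
class PositionClassifier {
    enum Action { PLAYING, PAUSED, SEEK }

    /**
     * Classifies each pair of consecutive position samples (in seconds).
     * pollIntervalSeconds is the assumed time between two samples;
     * toleranceSeconds is the assumed acceptable drift during normal playback.
     */
    static List<Action> classify(int[] positions, int pollIntervalSeconds, int toleranceSeconds) {
        List<Action> actions = new ArrayList<>();
        for (int i = 1; i < positions.length; i++) {
            int delta = positions[i] - positions[i - 1];
            if (delta == 0) {
                // Position did not change: stop or pause.
                actions.add(Action.PAUSED);
            } else if (Math.abs(delta - pollIntervalSeconds) <= toleranceSeconds) {
                // Position advanced by roughly one polling interval: normal playback.
                actions.add(Action.PLAYING);
            } else {
                // Unexpected jump forwards or backwards: winding operation.
                actions.add(Action.SEEK);
            }
        }
        return actions;
    }
}
```

For example, with one sample per second and no tolerance, the samples `0, 1, 2, 2, 2, 60, 61, 30` are read as two seconds of playback, a pause, a forward jump, one more second of playback and a backward jump.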

Specific app library documentation is included in the library deliverables themselves.

Step-by-Step Guide

The step-by-step guide gives a graphical and concise overview of HOW, WHEN, and WHY to measure. We recommend having a look at it.

General metadata tagging instructions

It is essential that you ensure the standardised functions and values are passed in your library implementation.

How to map:

| # | Metric / Dimension | Description | Variable Namespace in the Library | Required for Library Functionality | Source | Notes |
|---|---|---|---|---|---|---|
| 1 | sitename | Unique Kantar system name per broadcaster | sitename | mandatory | Assigned by Kantar | NOTE: You will be assigned "test" sitenames for testing purposes! |
| 2 | player | Broadcaster website or app player being used | pl | mandatory | Free choice | |
| 3 | player version | Version of media player or app being used | plv | mandatory | Free choice | e.g. "1.4.1.1", "1.0.5" |
| 4 | window dimension width | Width of the stream window, embedded or pop-out | sx | optional | Pass value on to Library | Recommended, although can be blank where unavailable |
| 5 | window dimension height | Height of the stream window, embedded or pop-out | sy | optional | Pass value on to Library | Recommended, although can be blank where unavailable |
| 6 | content id / program id | An ID describing the content | cq | mandatory | Following IARB convention | This parameter is to be used for OD content. Live content does not need a content id (cq=0). |
| 7 | stream | Description of the content stream (activity type/livestream channel id) | stream | mandatory | Following IARB convention | Descriptors of the type and delivery of content, not an identifier of the content itself. Stream types: ON-DEMAND, LINEAR (LIVESTREAM). For example: a programme that was originally broadcast starting on 24/09/2019 at 13:13:00 Tel Aviv local time (UTC+3) will start from playhead position “1569319980”. |
| 8 | content duration | Total length of the video being played, reported in seconds | dur | mandatory | Pass value on to Library | |
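Before handing metadata to the library, it can help to check that every variable marked "mandatory" in the mapping table is present. The Java sketch below is one possible pre-flight check. The `StreamMetadata` class and the map-based representation are assumptions for illustration; how metadata is actually passed depends on the library you use.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

/** Illustrative pre-flight check for the mandatory variables of the mapping table. */
class StreamMetadata {
    /** Variable names marked "mandatory" in the mapping table. */
    static final List<String> MANDATORY =
            Arrays.asList("sitename", "pl", "plv", "cq", "stream", "dur");

    /** Returns the mandatory variable names that are absent or blank in the given metadata. */
    static List<String> missingMandatory(Map<String, String> meta) {
        List<String> missing = new ArrayList<>();
        for (String key : MANDATORY) {
            String value = meta.get(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }
}
```

A map containing only `sitename`, `pl`, `plv` and `dur` would be reported as missing `cq` and `stream`. The optional `sx` and `sy` values are deliberately not checked.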

Before you start

Once you have read the documentation, and before you begin your implementation, please contact us so we may review together the behaviour of your player and therefore the scope of the implementation.

FROM IMPLEMENTATION TO PUBLICATION - PROCESS

The process of verifying your stream implementation follows four main steps: Unit testing, Comms testing, Go-live acceptance testing, and Operational testing.

Unit Tests for Initial Implementation

By the time a broadcaster receives a library, the package has already passed unit tests conducted by Kantar.

Communications Tests

In this phase the http-requests that are being sent from the Kantar library inside your player to the collection servers are tested and verified.

Desktop Player (“dotcom”) Streaming Measurement implementations:

For desktop player implementations, you will need to observe the log stream data and verify the content of the heartbeats. You do this by running a simple analysis of the http-requests sent from and to a browser whilst your webplayer is operating.

This method produces NO warnings or error messages. It is a simple trace of the http-requests sent to the Kantar measurement systems.

You can observe an example of a correct webplayer implementation using an http analyser such as the "httpfox" plugin in Firefox or "developer tools" in Chrome.

Step-by-step instructions for running an http-request Analyser:


1. On a standard laptop / desktop device – Open the browser.

2. Using Chrome: Press “CTRL+SHIFT+i”. This will bring up the information screen along the bottom of the browser window. (On other browsers you might need a specific plugin, for example httpfox on Firefox.)

3. The info screen contains several tabs across the page, but the one that matters is “network”. Select “network”.

4. In the main browser window - Open the player being tested, i.e. your webplayer

5. As soon as you load the webpage, you will notice a stream of events occurring in the information screen along the bottom of the screen.

6. Click in the information screen to make sure it has focus, then click on the filter icon. This will allow you to enter a value in the search box.

7. Enter “2cnt.net” (this is the receiving server) and click the option “filter”. You will now see only the requests going to and coming from the project systems.

8. If you return focus to the player you can now test the various functions (pause, rewind, fast forward etc.) and watch the results in the http-request data scrolling along the information screen.

9. This data is NOT captured automatically so you MUST copy and paste ALL http-requests after the test has been completed; this data should be shared with us in order for the implementation to be signed off.
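If you export or copy all requests rather than only the filtered ones, a small helper can isolate the requests addressed to the receiving server before you share them. The Java sketch below is an illustration under assumptions: the `HeartbeatFilter` class is hypothetical, and it matches on the host name (2cnt.net or any subdomain) rather than on a substring of the whole URL.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Illustrative helper: keeps only the requests addressed to the receiving server. */
class HeartbeatFilter {
    static final String COLLECTION_DOMAIN = "2cnt.net";

    static List<String> onlyCollectionRequests(List<String> requestUrls) {
        List<String> kept = new ArrayList<>();
        for (String url : requestUrls) {
            String host;
            try {
                host = URI.create(url.trim()).getHost();
            } catch (IllegalArgumentException e) {
                continue; // Not a parseable URL: skip it.
            }
            if (host == null) {
                continue; // No host component: skip it.
            }
            host = host.toLowerCase(Locale.ROOT);
            if (host.equals(COLLECTION_DOMAIN) || host.endsWith("." + COLLECTION_DOMAIN)) {
                kept.add(url);
            }
        }
        return kept;
    }
}
```

Matching on the host avoids false positives from unrelated requests that merely contain "2cnt.net" somewhere in their path or query string.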

You can see an example of the heartbeats sent from the Kantar libraries here:

kantarmedia.atlassian.net/wiki/spaces/public/pages/159727225/BARB+TVPR+Test+Tool#BARBTVPRTestTool-Heartbeatssentfromthelibraries

Once verification is complete your implementation can move to the next QA stage.

Mobile App Streaming Measurement implementations:

For mobile streaming apps, a data verification test tool has been developed by Kantar for use by the Broadcaster development groups. It allows you to analyze and test the output records generated by the Kantar library app integrated within your player in real-time.

You can find detailed implementation and operation instructions here: Stream Testing

You can access the app test tool directly here: Streaming App Test Tool

This document covers:

- The heartbeats sent from the Kantar libraries

- How to verify the accuracy of your implementation

- How to operate the Kantar Testing Tool

Once the log stream test tool verification is complete, your implementation can move to the next QA stage.

Go-Live Acceptance Tests

Before live release, your desktop or mobile app player integration must pass tailored acceptance tests to ensure it adheres to the desired project outcomes.

Every player works in subtly different ways and is often subject to customisation. Desired behaviours must be understood in the context of the functionality and user features specific to your player. The specific acceptance criteria will be determined as a result of discussions between developers and business analysts at both the Broadcaster and CSM/Kantar.

CSM/Kantar will support this process to assess desired behaviours, e.g. correct handling of stream interruptions. We will undertake acceptance testing of your desktop or mobile app player integration if you can make a staging/beta release available.

For mobile app streaming measurement implementations we recommend you share your build using the TestFlight platform.

We will provide our device/account details. Other methods or products for providing access to a pre-release version of your implementation are of course also accepted.

The table below describes example test scenarios.

| # | Scenario | Description | Outcome | Notes |
|---|---|---|---|---|
| a | OD stream, uninterrupted by buffering or commercials | 30 minute stream is viewed completely in one unbroken session, no pausing or buffering and no commercial breaks. | This process should test that the library app is measuring the complete viewing stream; it will show both the start position and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings). | i.e. the last reported position should be equal to the duration |
| b | OD stream, interrupted only by commercials | 30 minute stream is viewed completely in one unbroken session, with only commercials interrupting the programme stream. | This process should test that the library app is measuring the complete viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also continues measuring correctly after each commercial break (mid/pre rolls). | i.e. the last reported position should be equal to the duration AND "uid" remains unchanged |
| c | OD stream paused <30 minutes | 30 minute stream is viewed for 15 minutes. The viewer pauses the content for <30 minutes then resumes the stream from the same point (point of pause), watching to the end of the stream. Commercials viewed as normal. | This process should test that the library app is measuring the complete viewing stream; it will show both the start position and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also resumes measuring at the correct position after the paused period (Minor). | |
| d | OD stream paused >30 minutes | 30 minute stream is viewed for 15 minutes. The viewer pauses the content for >30 minutes then resumes the stream from the same point (point of pause), watching to the end of the stream. Commercials viewed as normal. | This process should test that the library app is measuring the complete viewing stream; it will show both the start position and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also resumes measurement at the correct position after the paused period (Major). The 30 min period used for the test can/should be extended if the player has internal “sleep” functions enabled. | |
| e | OD stream on mobile device, background/foreground <30 minutes | The user begins watching a stream on a mobile device. After 10 minutes (position in stream = 00:10:00) the user sends the app to the background and uses a different app. 5 mins later the user returns the player app to the foreground and continues viewing the same programme. The player app itself has remembered the position in stream and begins again from position 00:10:01. The user completes viewing of the programme stream uninterrupted until the end. | This process should test that the library app is measuring the complete viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also continues measuring correctly after each commercial break (mid/pre rolls). It should also indicate whether foreground / background activities are being measured correctly after a minor period of time. | |
| f | OD stream on mobile device, background/foreground >30 minutes | The user begins watching a stream on a mobile device. After 10 minutes (position in stream = 00:10:00) the user sends the app to the background and uses a different app. 60 mins later the user returns the player app to the foreground and continues viewing the same programme. The player app itself has remembered the position in stream and begins again from position 00:10:01. The user completes viewing of the programme stream uninterrupted until the end. | This process should test that the library app is measuring the complete viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also continues measuring correctly after each commercial break (mid/pre rolls). It should also indicate whether foreground / background activities are being measured correctly after a major period of time. | |
| g | OD stream on PC device, “Shutting the lid” | The user begins watching a stream on a PC device. After 10 minutes (position in stream = 00:10:00) the user closes the laptop lid (on a desktop you could simply press the power-off button). This should be similar to sending the app to the background on a mobile device. 5 mins later the user opens the laptop and resumes watching the same programme. The player app should / may remember the position in stream and begin again from position 00:10:01. The user completes viewing of the programme stream uninterrupted until the end. | This process should test that the library app is measuring the complete viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also continues measuring correctly after each commercial break (mid/pre rolls). It should also indicate whether “foreground / background” activities on a PC device are being measured correctly. | This test may need refinement to cater for any Windows peculiarities that may be active on the laptop. |
| h | OD asset segment viewed multiple times (“the football goal”) | The user begins viewing a 60 minute stream. After 5 minutes of viewing, the user FFWDs 45 minutes into the stream. The user then continues viewing the stream for 5 minutes of the programme (content minutes 45 – 49). The user then RWDs the content back to minute 45 and watches the same 5 minutes again. This behavior is repeated 4 more times, leading to a total streaming of 35 minutes comprising 6 x 5 minute streaming of the same piece of content plus that original first 5 minutes, within a single asset. | This process should test that the library app is measuring the complete viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring the viewing until the end of the stream (NO false endings) and also continues measuring correctly after each commercial break (mid/pre rolls). It should also indicate whether multiple rewind activities are being measured correctly and whether the correct period of viewing is measured. | |
| i | OD Commercial segment viewed multiple times | The user begins viewing a 30 minute stream. After 1 minute of viewing, the user FFWDs past the first commercial break. (The commercials should / may play out as normal). The user then continues viewing the stream for 1 more minute. The user then RWDs the stream to a point immediately before the commercial break, then continues watching for 1 more minute. (The commercials should play out as normal). This behavior is repeated X more times, before stopping the stream. | This process should test that the library app is correctly measuring the asset viewing stream; it will show the start position, the breaks for the commercials and the final position in the log stream record. This will ensure the process is measuring correctly after each commercial break (mid/pre rolls). It should also indicate whether multiple rewind activities are being measured correctly and whether the correct period of viewing is measured. | |
| j | OD cross-device | The user begins streaming a programme on a PC device. The stream is viewed for 15 minutes and the user then pauses the content (position in stream = 00:15:00). The user now moves to a tablet device and resumes the stream viewed previously on the PC, but this time via the tablet player app. The tablet player should resume streaming of the same programme at the position of the pause on the PC device (position in stream = 00:15:01). The user completes viewing of the programme stream uninterrupted until the end. | This process should test that the library app is measuring the viewing stream correctly from both devices; it will show the start positions, the breaks for the commercials and the final position in the log stream record for each device used. This will ensure the process is measuring correctly after each commercial break (mid/pre rolls). It should also highlight whether the process is correctly measuring the device type change and the starting position of the resume activity. | |
| k | OD asset finish/auto-restart feature | When an on-demand stream concludes, the player automatically returns to the beginning of the stream and restarts the stream. | This process should test what happens when a player automatically restarts a programme asset. It is important to make sure that the track method is correctly handled when the programme finishes (unload) and that tracking begins again when the asset auto-restarts (call trackMethod). A new View should be created. Where the auto-restarted content begins again and the stream progresses beyond the 3-sec point, a new AV Start will also be created. | |
| l | Cookie persistency | The user plays back multiple long-tail streams. Observe the cookie's persistence across different streaming sessions. | The process should test that the cookie remains the same across all streaming sessions. Any changes to the cookie should be reported. | |
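When evaluating a test run, two of the checks above lend themselves to simple arithmetic: the total viewing time across repeated segments (scenario h) and the "no false endings" condition (last reported position equals duration). The Java sketch below shows one way to express them. The `ViewingCheck` class and the representation of played segments as `{start, end}` pairs in seconds are assumptions for illustration, not the format of the log stream record.

```java
/** Illustrative checks for evaluating acceptance test runs. */
class ViewingCheck {
    /**
     * Sums the played seconds over {start, end} segments.
     * Repeated segments count every time they are played.
     */
    static int totalPlayedSeconds(int[][] segments) {
        int total = 0;
        for (int[] segment : segments) {
            if (segment.length != 2 || segment[1] < segment[0]) {
                throw new IllegalArgumentException("segment must be {start, end} with end >= start");
            }
            total += segment[1] - segment[0];
        }
        return total;
    }

    /** "No false endings": the last reported position equals the content duration. */
    static boolean reachedEnd(int lastReportedPositionSeconds, int durationSeconds) {
        return lastReportedPositionSeconds == durationSeconds;
    }
}
```

For scenario h, the first 5 minutes (`{0, 300}`) plus six viewings of minutes 45 to 50 (`{2700, 3000}`) add up to 2100 seconds, which matches the 35 minutes stated in the table.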

The table below describes example test scenarios for live streams.

| # | Scenario | Description | Outcome |
|---|---|---|---|
| 1 | Live - View one stream 30 minutes | The user begins viewing a live stream and continues to play the stream for 30 minutes. | This process should test whether the library app is correctly measuring live streaming. |
| 2 | Live - View multiple streams | The user begins viewing a live stream, continues to play the stream for 10 minutes, and then starts playing a different live stream. The user continues to play this for another 10 minutes before stopping. | This process should test whether the library app is correctly measuring live streams when multiple streams are played. |
| 3 | Live - Pause & resume | The user begins viewing a live stream, pauses the session after 5 minutes, and resumes the session 5 minutes later. | This process should test whether the library app is correctly measuring live streams on pause & resume events. |
| 4 | Live - App backgrounded & foregrounded | The user begins viewing a live stream, minimises the app after 5 minutes, and returns to the app 5 minutes later. | This process should test whether the library app is correctly measuring live streams on app background & foreground events. |
| 5 | Cookie persistency | The user plays back multiple long-tail streams. Observe the cookie's persistence across different streaming sessions. | The process should test that the cookie remains the same across all streaming sessions. Any changes to the cookie should be reported. |

We will provide written confirmation once acceptance tests have been successfully completed, signing off your implementation.

Publishing your player

With acceptance tests complete, you may now schedule your implementation for publication.

Please:

- Inform us in advance of the go-live date

- Change your site-specific sitename from the test value to the live value, e.g. sitename "sampletest" must be changed to "sample".

You will use your site-specific test sitename ("sampletest") for testing future upgrades in staging environments.

Live Operational Calibration

The Live Operational Calibration process is used to “calibrate” the project metrics in the weeks immediately after your player implementation goes live.

These are reviewed between the FSC and CSM/Kantar. At the end of the process, your data will be signed off for publication on an ongoing basis. All calibration phase data and discussions are treated in the strictest confidence.

Managing Future Updates

Once your integration is live, library sensor code changes will be infrequent. Changes will largely be driven by software environment changes (e.g. introduction of Apple IDFA/IFV). When a new Kantar library becomes available, you will be notified whether the update is critical to the measurement and therefore mandatory, or whether an upgrade can be scheduled at your discretion.

Integration and testing of your player with the new Kantar library code must not take place on your live system – you must not use your live sitename at any point during the integration process. Your live service is protected during the testing stages by simply directing your test traffic to your alternative site-specific test sitename, e.g.

Test traffic directed to sitename: sampletest

Live traffic directed to sitename: sample
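One way to reduce the risk of test traffic reaching the live sitename is to select the sitename from the build type in a single place. The Java sketch below illustrates this. The `Sitenames` class and the `isLiveBuild` flag are hypothetical, and both sitenames are supplied explicitly because they are assigned by Kantar.

```java
/** Illustrative holder for the two Kantar-assigned sitenames of one broadcaster. */
final class Sitenames {
    private final String liveSitename;
    private final String testSitename;

    Sitenames(String liveSitename, String testSitename) {
        if (liveSitename.equals(testSitename)) {
            throw new IllegalArgumentException("live and test sitenames must differ");
        }
        this.liveSitename = liveSitename;
        this.testSitename = testSitename;
    }

    /** Staging and beta builds always get the test sitename; only live builds get the live one. */
    String forBuild(boolean isLiveBuild) {
        return isLiveBuild ? liveSitename : testSitename;
    }
}
```

With `new Sitenames("sample", "sampletest")`, a staging build resolves to `sampletest` and a published build to `sample`.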

Once the upgrade implementation has been verified by all parties, you will be advised to switch to your standard sitename before publishing your upgraded player.

CUSTOMER DATA PRIVACY DOCUMENTATION

Here is an overview of all the types of data that are collected and/or processed by Kantar in the context of the project.

- Stream metadata that are explicitly defined in the video players by the broadcasters. See: General metadata tagging instructions.
- Stream metadata that are not explicitly defined in the video players by the broadcasters, but are automatically transferred to and via the libraries' functionalities:
  - Device identifiers:
    - From web players: cookie-ID
    - From iOS devices: Apple Advertising ID (IDFA) and the ID for Vendors (IFV) for iOS 6/7, MAC address for iOS 5. All are MD5 hashed and truncated to 16 characters. NOTE: full unencrypted IDs are never sent to our systems!
    - From Android devices: Google Advertising ID, Android ID and Device ID (MD5 hashed and truncated to 16 characters). NOTE: full unencrypted IDs are never sent to our systems!
  - Screen resolution (!= stream resolution)
  - Viewtime: contact time with the player, including all buffering, pausing and advertisements. This is different from "playtime"!
- Data that are inherent to internet traffic and the measurement process:
  - IP addresses are used for processing, but they are not saved. They are used for:
    - Geo-location of users.
    - Determining the ISP of users.
    - When no cookie is accepted: creating a browser fingerprint based on IP + browser user agent.
  - Browser user agent where applicable.
  - Timestamps when heartbeats are received.
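To make "MD5 hashed and truncated to 16 characters" concrete, here is a minimal Java sketch. The libraries perform this step themselves, so the code is purely illustrative. The `DeviceIdHasher` class is hypothetical, and the encoding details (UTF-8 input, lowercase hexadecimal digest, keeping the first 16 characters) are assumptions that may differ from the library's implementation.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Illustrative only: hashes an identifier with MD5 and keeps half of the hex digest. */
class DeviceIdHasher {
    static String hashAndTruncate(String rawId) {
        try {
            MessageDigest md5 = MessageDigest.getInstance("MD5");
            byte[] digest = md5.digest(rawId.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(32);
            for (byte b : digest) {
                hex.append(String.format("%02x", b)); // two lowercase hex characters per byte
            }
            // The full MD5 digest is 32 hex characters; keep only the first 16.
            return hex.substring(0, 16);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available on this platform", e);
        }
    }
}
```

Because half of the digest is discarded, the shortened value cannot be matched against a full MD5 hash of the same identifier computed elsewhere.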

Data Collection and Aggregation

Kantar publishes only aggregated results. There will be no device identification possible within the published results.

Informing Users

With regard to data privacy, there is an obligation to inform users that the application monitors their actions and transmits them to a measuring system. Furthermore, users must be informed that they can switch off the tracking in the application, and how to do this.

For this purpose, you can use the following example text in an appropriate place in your app implementation:

Our app uses the "mobile app streaming sensor" of Kantar Germany GmbH, to gather statistics about the usage of our site. This data is collected anonymously.

This measurement of mobile usage uses an anonymized device identifier for recognition purposes. To ensure that your device ID cannot be clearly identified in our systems, it is encrypted and reduced by half. Only the encrypted and shortened device identifier is used in this measurement context.

This mobile measurement was developed in compliance with data protection laws. The aim of the measurement is to determine the intensity of use, the extent of use and the number of users of a mobile application. At no time will individual users be identified. Your identity is always protected. You will not receive any advertising through this system.

You can opt-out of the measurement by our app with the following activation switch.

Please note that only the measurement of our app is disabled. It may be that you will continue to be measured by other broadcasters using the "mobile app streaming sensor".

Opt-Out on Mobile Applications

The application developer must give users the ability to stop further tracking of their actions.

The library offers the following method to do this:

```java
/**
 * When the value <code>false</code> is specified, the sending of
 * requests to the measuring system is switched off.
 * This value is <code>true</code> by default.
 */
public void setTracking(boolean tracking) { }

/**
 * Delivers the value <code>true</code> when the tracking
 * is activated otherwise the value is <code>false</code>.
 */
public boolean isTracking() { }
```

The library does not persist the opt-out decision. Persistent storage of the decision needs to be implemented by the app developer.
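A minimal sketch of how an app might persist the decision and re-apply it on every start is shown below. Only `setTracking` and `isTracking` come from the library description above; the `TrackingSwitch` interface is a stand-in for whatever library object exposes them, and the `OptOutStore` class and its properties-file storage are assumptions for illustration. On Android, a platform store such as SharedPreferences would be the more usual choice.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

/** Stand-in for the library object that exposes setTracking/isTracking. */
interface TrackingSwitch {
    void setTracking(boolean tracking);
    boolean isTracking();
}

/** Hypothetical app-side store that keeps the user's decision across restarts. */
class OptOutStore {
    private static final String KEY = "tracking.enabled";
    private final Path file;

    OptOutStore(Path file) {
        this.file = file;
    }

    /** Returns the stored decision; tracking is enabled when nothing has been stored yet. */
    boolean isTrackingEnabled() {
        if (!Files.exists(file)) {
            return true;
        }
        Properties props = new Properties();
        try (InputStream in = Files.newInputStream(file)) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return Boolean.parseBoolean(props.getProperty(KEY, "true"));
    }

    /** Stores the user's decision so that it survives an app restart. */
    void saveDecision(boolean trackingEnabled) {
        Properties props = new Properties();
        props.setProperty(KEY, Boolean.toString(trackingEnabled));
        try (OutputStream out = Files.newOutputStream(file)) {
            props.store(out, "user tracking decision");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Call at app start, before any measurement begins. */
    void applyTo(TrackingSwitch sensor) {
        sensor.setTracking(isTrackingEnabled());
    }
}
```

The app would call `saveDecision(false)` when the user flips the opt-out switch and `applyTo(...)` on each launch, so the library never starts sending requests for a user who has opted out.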

Opt-Out on Desktop Player

In the web environment, the opt-out mechanism uses specific cookie content to identify a client who does not want to be measured. Because no direct or standardized way of recognizing the client is provided, there is no other way to identify such clients.

A client can change their identity at any time (e.g. by deleting cookies). Such an identity change always leads to the loss of the initial client's specific settings, causing the client to appear in the system again from the moment of the change.

It is therefore necessary that a client who refuses measurement communicates this setting to the system constantly. This means that the client must not delete the opt-out cookie.

The opt-out page for the project is located at http://optout.2cnt.net/. Any broadcaster can link to or embed that page on their own pages.

More information can be found at https://kantarmedia.atlassian.net/wiki/spaces/public/pages/159726816/OPT-OUT+and+Anonymization.

TBC...